Roughly 100 billion nerve cells in the human brain support complex cognitive processes by hierarchically processing massive amounts of incoming information from the external world. For instance, each processing stage in the neural hierarchy responsible for visual perception can be considered a computational unit that extracts visual features relevant for object perception from the light falling onto the photoreceptor array of the retina. Locating the different stages of cognitive processing in the brain, and identifying the types of information extracted at each stage, are therefore among the most important open problems in neuroscience today.

To answer these questions, we develop innovative functional magnetic resonance imaging (fMRI) methods alongside complementary machine learning techniques. With these technologies, the neural responses elicited in the brain during natural vision, hearing and language perception can be detected with exceptional sensitivity. Beyond enabling a detailed study of the structure and function of the brain's subsystems, these techniques also make it possible to read the brain: to infer the content of conscious perception from brain activity alone.
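Reading the brain is usually framed as a decoding problem. What follows is a minimal sketch of one standard approach, stimulus identification with an encoding model. It is an assumption for illustration and not a description of this lab's own pipeline. Given a measured pattern of voxel responses, the code predicts the response pattern for each candidate stimulus and picks the candidate whose prediction correlates best with the measurement. The weight matrix `W`, the candidate features `F`, the function name `identify`, and all sizes and noise levels are hypothetical and simulated with NumPy.

```python
import numpy as np

def identify(measured, predicted_candidates):
    """Return the index of the candidate whose predicted voxel pattern best
    correlates (Pearson r) with the measured pattern, plus all scores.
    measured: (voxels,); predicted_candidates: (candidates, voxels)."""
    m = (measured - measured.mean()) / measured.std()
    p = predicted_candidates - predicted_candidates.mean(axis=1, keepdims=True)
    p /= predicted_candidates.std(axis=1, keepdims=True)
    scores = (p @ m) / measured.size  # one correlation per candidate
    return int(np.argmax(scores)), scores

# Hypothetical simulation: W stands in for an already-fitted encoding model,
# F holds the stimulus features of each candidate stimulus.
rng = np.random.default_rng(1)
n_features, n_voxels, n_candidates = 30, 200, 20
W = rng.normal(size=(n_features, n_voxels))
F = rng.normal(size=(n_candidates, n_features))

# The "measured" response is the model prediction for one stimulus plus noise.
true_idx = 7
measured = F[true_idx] @ W + 3.0 * rng.normal(size=n_voxels)

best, scores = identify(measured, F @ W)
print(best, true_idx)
```

In real data the candidate set, the noise level and the encoding model all come from the experiment. Identification accuracy then falls as the candidate set grows or as the model's predictions become less accurate.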

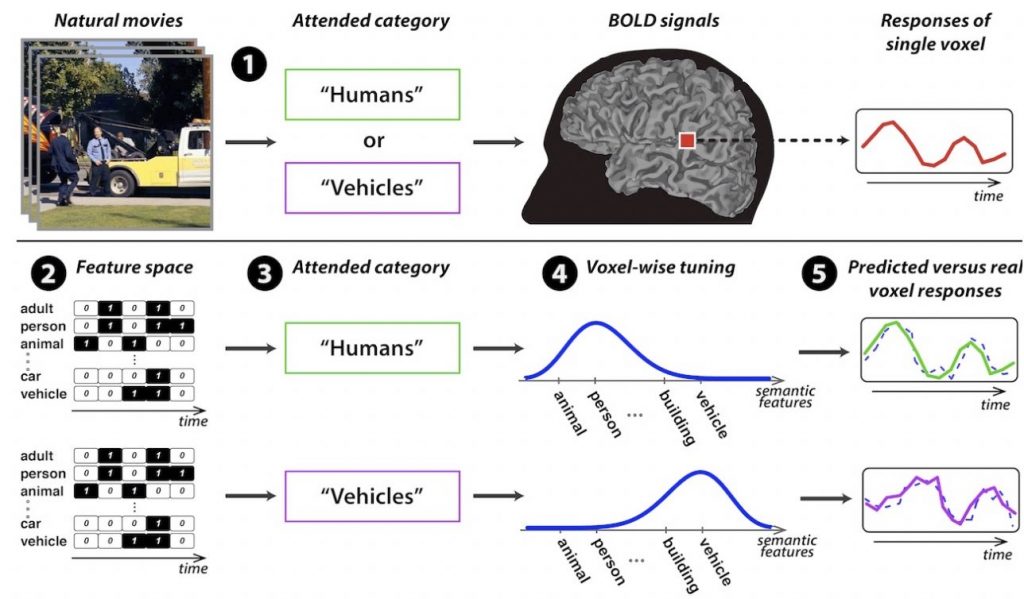

One example application is mapping the human visual cortex with detailed mathematical models that accurately capture its input-output relationships. Although real-world scenes are cluttered with many different objects, people are extremely skilled at finding target objects in natural settings and at quickly switching their attention between different targets. The exact neural mechanisms mediating this extraordinary ability are not yet known. Previous studies have reported relatively simple mechanisms that enhance the brain activity evoked by attended objects without affecting how information is represented in each brain region. However, because the number of cortical neurons is limited, it is unlikely that every brain region maintains a fixed representation regardless of behavioral demands.

To study the nature of neural representations during visual search, we use a powerful computational modeling technique that describes how the visual information in complex natural movies relates to the brain activity it evokes. We analyze the relationship between high-dimensional imaging data and high-level features of the visual stimuli with advanced machine learning techniques.
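The publications listed here describe this approach as voxelwise modeling. A minimal sketch of its core idea follows, under stated assumptions: each voxel's BOLD response is modeled as a linear function of stimulus features, fitted with ridge regression. The ridge penalty is chosen on a held-out split, and the model is scored by how well it predicts responses to unseen stimuli. The data are synthetic, and the feature and voxel counts, the candidate penalties and the helper names `fit_ridge` and `voxel_correlations` are hypothetical. This is not the lab's implementation.

```python
import numpy as np

def fit_ridge(X, Y, alpha):
    """Closed-form ridge regression, W = (X^T X + alpha*I)^-1 X^T Y.
    X: (time points, features); Y: (time points, voxels).
    Returns W with shape (features, voxels): one weight vector per voxel."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def voxel_correlations(Y_true, Y_pred):
    """Pearson correlation between measured and predicted responses, per voxel."""
    yt = (Y_true - Y_true.mean(axis=0)) / Y_true.std(axis=0)
    yp = (Y_pred - Y_pred.mean(axis=0)) / Y_pred.std(axis=0)
    return (yt * yp).mean(axis=0)

# Hypothetical synthetic data: features could be, e.g., object and action
# categories labeled in each movie frame; responses are simulated BOLD signals.
rng = np.random.default_rng(0)
n_train, n_test, n_features, n_voxels = 600, 200, 50, 100
true_W = rng.normal(size=(n_features, n_voxels))
X_train = rng.normal(size=(n_train, n_features))
X_test = rng.normal(size=(n_test, n_features))
Y_train = X_train @ true_W + 5.0 * rng.normal(size=(n_train, n_voxels))
Y_test = X_test @ true_W + 5.0 * rng.normal(size=(n_test, n_voxels))

# Pick the ridge penalty on a validation split of the training data.
alphas = [0.1, 1.0, 10.0, 100.0, 1000.0]
fit_idx, val_idx = slice(0, 480), slice(480, n_train)
val_scores = []
for a in alphas:
    W_a = fit_ridge(X_train[fit_idx], Y_train[fit_idx], a)
    pred = X_train[val_idx] @ W_a
    val_scores.append(voxel_correlations(Y_train[val_idx], pred).mean())
best_alpha = alphas[int(np.argmax(val_scores))]

# Refit on all training data and evaluate prediction accuracy on the test set.
W = fit_ridge(X_train, Y_train, best_alpha)
r = voxel_correlations(Y_test, X_test @ W)
print(f"alpha={best_alpha}, mean test correlation={r.mean():.2f}")
```

Real fMRI pipelines typically also add time-lagged copies of the features to account for hemodynamic delay, and they often select the penalty per voxel. Both steps are omitted here for brevity.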

Sample publications:

Yilmaz O, Celik E, Çukur T. Informed feature regularization in voxelwise modeling for naturalistic fMRI experiments. European Journal of Neuroscience. 2020.

Shahdloo M, Celik E, Çukur T. Biased competition in semantic representation during natural visual search. Neuroimage. 2019.

Celik E, Dar SUH, Yilmaz O, Keles U, Çukur T. Spatially informed voxel-wise modeling for naturalistic fMRI experiments. Neuroimage. 2019 Feb;186:741-57.

Çukur T, Huth AG, Nishimoto S, Gallant JL. Functional subdomains within scene-selective cortex: Parahippocampal Place Area, Retrosplenial Complex, and Occipital Place Area. Journal of Neuroscience. 2016 Oct;36(40):10257-73.

Çukur T, Nishimoto S, Huth AG, Gallant JL. Attention during natural vision warps semantic representation across the human brain. Nature Neuroscience. 2013 Jun;16(6):763-70.